Every software engineer is very familiar with the concept of unit tests. Within a single environment, the level of unit testing varies from projects without any proper unit tests to projects where writing tests takes more time than writing the code that actually runs in production.

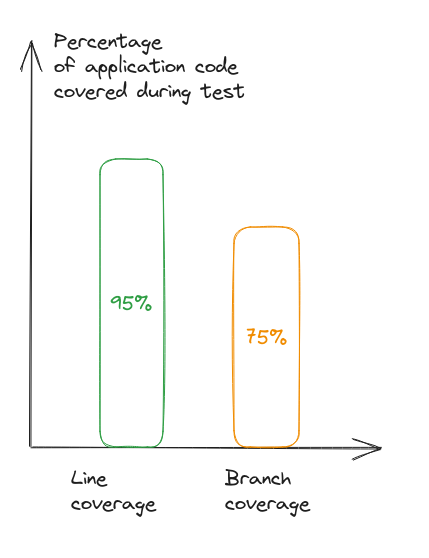

The two most popular unit testing metrics in the projects I have worked on are:

- Coverage of line (COL) or line coverage

- Coverage of branch (COB) or branch coverage

Normally, COL is around 90 to 95% of the application code when the test suite is executed, and COB is around 85 to 90%. That is the promise many outsourcing companies give to customers as trusted software vendors. In the Java space, I would say that with a JaCoCo report, those metrics mostly give us a sense of product quality. The higher the thresholds are, the better the nails we put into our test code to prevent regression issues.

Example for coverage:

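
As a small illustration of how the two metrics differ, here is a minimal Python sketch. The `shipping_fee` function and its business rule are made up for this example; they do not come from any real project.

```python
def shipping_fee(is_member: bool) -> int:
    """Return the shipping fee in cents (hypothetical business rule)."""
    fee = 500
    if is_member:
        fee = 0
    return fee


# This single test executes every line of shipping_fee, so line coverage
# (COL) is 100%. The `if` condition is only ever true, so the path that
# skips the `if` body is never taken and branch coverage (COB) is 50%.
assert shipping_fee(is_member=True) == 0

# Adding the non-member case exercises the remaining branch.
assert shipping_fee(is_member=False) == 500
```

This is why a COB threshold is usually harder to reach than a COL threshold on the same code.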

So, that’s it. At the end of the day, unit tests are simply a collection of small tests, dirt cheap to run, that give feedback on every change we put into production. Software engineers follow that guidance to implement production code and unit tests that meet the project’s expectations. There was no magic about this until recently, when I had a chance to work on a project where the team pushed unit tests in a direction with three different “test types”, which I found quite confusing. Even senior members of the project could not confidently define each group.

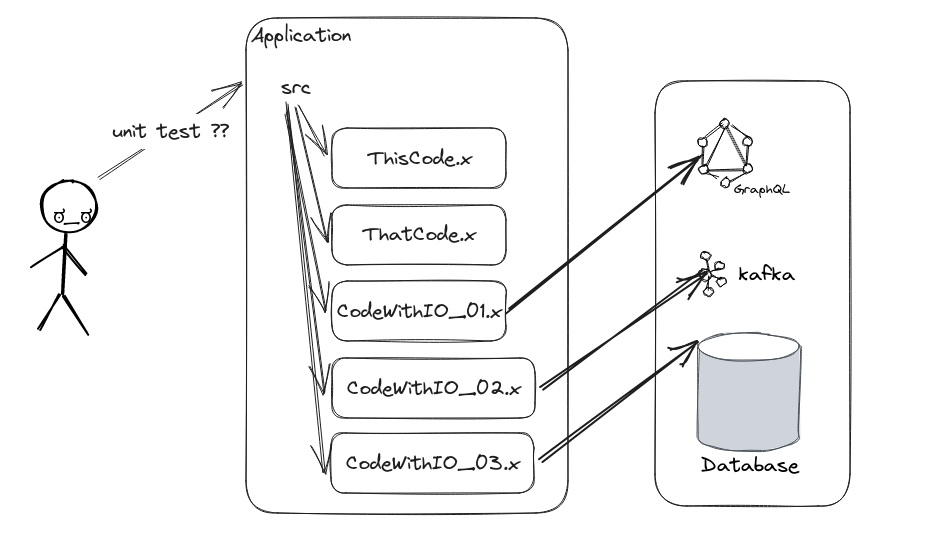

Let’s say we have a sample web application (in any programming language that supports modularity), as in the diagram below:

So the application is mostly composed of:

- One or more files which define entry points for the application.

- Some files that contain the application logic: validation, filling in missing data according to business requirements…

- Some files that deal with persisting data to a database, consuming an HTTP API (REST or GraphQL), writing or reading data from disk, interacting with a Kafka broker, etc.

- Due to modularity, one particular file can import and reuse functionality of other files.

So when a software engineer follows the common-sense guidance for unit testing metrics, COL and COB, that engineer will most likely just write unit tests that cover each file of the application source code piece by piece. They are all unit tests.

But hell no, the team doesn’t see unit tests that way. They invented three terms, used within the project, with the following descriptions:

| Term | Description | Example |
|---|---|---|
| unit test | Tests that cover a single file | A test covers all functions of file ThisCode.x alone |
| component test | Tests that cover two or more files, let’s say using modularity | A test covers functionality where ThisCode.x and ThatCode.x interact |
| blackbox test | Tests from application entry points, which require a running application | A test covers a request triggered through an HTTP endpoint or a Kafka topic |

For the unit test term, that is fair enough. For the blackbox test, quite often I see people call these application integration tests. Those tests verify integration from the application’s viewpoint, which means the application must work well not only with its own source files but also with any required external software such as a database, a Kafka broker, file storage, etc. The standing point here is at the application front, and the integration is from the application to its mandatory software dependencies. Please note that for integration we need to clarify our standing point and which direction we are looking in:

- If we look inward at our application -> we perform integration tests at the application level.

- If we look outward from our application, at being consumed by and consuming other applications -> we perform solution integration.

When you work on a complex solution, integration testing at the solution level goes beyond those blackbox tests, where each application is only a part of the picture. People may call these integration tests by whatever term they want, but at the end of the day, those kinds of tests are very costly and need planning and coordination across applications (aka development teams).

So this leaves me with the last term, component test. This term and its description give me pause… does it really need to be that way? Won’t a regular unit test at the call site between those files cover this requirement?

Let’s say we have pseudocode in a source file caller.x that uses both ThisCode.x and ThatCode.x:

```
// pseudocode
import getThis, doThis, doThis2, doThis3 from './ThisCode'
import getThat, getSemiFinal, getFinal, doThat, computeThat from './ThatCode'

// a function that uses both ThisCode and ThatCode
fun callerUseBothThisAndThat() {
  callerDo1()
  doThis()
  const thisFlag = getThis()
  const thatFlag = getThat()
  const computed = computeThat()
  if (thisFlag && thatFlag) {
    callerDo1()
    doThis2(getFinal(), computed)
  } else {
    callerDo2()
    doThis3(getSemiFinal(), computed)
  }
}

// some code without any ThisCode and ThatCode usage
fun callerDo1() {}

// some code without any ThisCode and ThatCode usage
fun callerDo2() {}

fun callerUseDoThis() {
  callerDo1()
  // some code with ThisCode usage
  doThis()
}

fun callerUseDoThat() {
  callerDo2()
  // some code with ThatCode usage
  doThat()
}

fun entryPoint() {
  // some code
  callerDo1()
  doThis()
  doThat()
}
```

From the list of functions, we can see that:

- callerDo1() and callerDo2() tests are unit tests

- all callerUseDoThis(), callerUseDoThat() and callerUseBothThisAndThat() tests are component tests

- entryPoint() tests are blackbox tests

This is because callerDo1() and callerDo2() relate only to the caller source file, while the other three functions callerUseDoThis(), callerUseDoThat() and callerUseBothThisAndThat() involve other source files. Tests for the entryPoint() function are blackbox tests.
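
To make the split concrete, here is a minimal Python sketch of how the first two categories might look in test code. The names `ThisCode`, `caller_do1` and `caller_use_do_this` are hypothetical stand-ins for the pseudocode above. Running the second test against the real collaborator is an assumption about the team's convention, not something their definition spells out.

```python
# Hypothetical stand-in for ThisCode.x. In a real project this class and the
# caller functions below would live in separate source files.
class ThisCode:
    def __init__(self) -> None:
        self.calls = 0

    def do_this(self) -> str:
        self.calls += 1
        return "this-done"


# Stand-ins for functions in caller.x.
def caller_do1() -> str:
    return "prepared"


def caller_use_do_this(this_code: ThisCode) -> str:
    return f"{caller_do1()}:{this_code.do_this()}"


def test_caller_do1() -> None:
    # "unit test" in the team's terms: only caller code is executed.
    assert caller_do1() == "prepared"


def test_caller_use_do_this() -> None:
    # "component test" in the team's terms: caller code runs together with
    # the real ThisCode (assumed here), so two "files" are involved.
    this_code = ThisCode()
    assert caller_use_do_this(this_code) == "prepared:this-done"
    assert this_code.calls == 1


if __name__ == "__main__":
    test_caller_do1()
    test_caller_use_do_this()
```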

Now consider another function:

```
// pseudocode
fun callerDo3() {
  doThat()
  computeThat()
}
```

This piece of code spreads execution from caller.x to ThatCode.x, but should we call its test a component test or a unit test, since it only involves functionality from one source file?

It seems to me that the term component test is about integrating source files. It might be an integration test where:

- the standing point is the application source root directory, and the direction is looking inward

- the integration scope is the application source files

Hang on a minute, isn’t that the purpose of a regular unit test? We always have callers and callees in our application. Our unit tests cover caller and callee by mocking the required input combinations to satisfy the COL and COB metrics. Unit tests themselves already cover source file integration! In my opinion, this is an unnecessary term and a doubtful approach to writing tests.
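
A minimal Python sketch of that idea, using `unittest.mock` from the standard library. The function `caller_use_both` is a simplified, hypothetical port of callerUseBothThisAndThat() (the callerDo1() and callerDo2() calls are left out), the collaborators are passed in as arguments to keep the example self-contained, and the return values set on the mocks are arbitrary.

```python
from unittest.mock import Mock


def caller_use_both(this_code, that_code):
    """Simplified, hypothetical port of callerUseBothThisAndThat()."""
    this_code.do_this()
    this_flag = this_code.get_this()
    that_flag = that_code.get_that()
    computed = that_code.compute_that()
    if this_flag and that_flag:
        this_code.do_this2(that_code.get_final(), computed)
    else:
        this_code.do_this3(that_code.get_semi_final(), computed)


def make_mocks(this_flag: bool, that_flag: bool):
    """Build mocked collaborators that return the given flag combination."""
    this_code, that_code = Mock(), Mock()
    this_code.get_this.return_value = this_flag
    that_code.get_that.return_value = that_flag
    that_code.compute_that.return_value = 42
    that_code.get_final.return_value = "final"
    that_code.get_semi_final.return_value = "semi-final"
    return this_code, that_code


def test_both_flags_set():
    # Covers the `if` branch.
    this_code, that_code = make_mocks(True, True)
    caller_use_both(this_code, that_code)
    this_code.do_this2.assert_called_once_with("final", 42)
    this_code.do_this3.assert_not_called()


def test_one_flag_missing():
    # Covers the `else` branch.
    this_code, that_code = make_mocks(True, False)
    caller_use_both(this_code, that_code)
    this_code.do_this3.assert_called_once_with("semi-final", 42)
    this_code.do_this2.assert_not_called()


if __name__ == "__main__":
    test_both_flags_set()
    test_one_flag_missing()
```

Both branches of the caller are exercised, and the assertions check how the caller hands data from one file to the other, without any new test category.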

Gradually, my moment of pause gets bigger and bigger. I think there should be a better way to explain a component from the application perspective, and based on that we can develop the component test type as a unit test layer. This will be part 2 of this blog entry.

P/S:

- I prefer just the simple, plain old unit test term. That’s it. No BS around calling a well-known thing by new buzzy names.

- Line coverage (COL) and branch coverage (COB) are king and queen for unit tests. We must stick to these metrics.